7 pytest Plugins You Must Definitely Use

The best pytest plugins you should start using today

TL;DR: In this guide, I’ll present the top 7 pytest plugins I find indispensable. They made my testing experience 10x better. No matter how complex your Python project, you can always benefit from one or more of them.

pytest is a Python testing framework whose popularity has been growing steadily. Its simplicity and flexibility set it apart from the other Python testing libraries. Its simplicity makes tests cleaner and less verbose. And when it comes to flexibility, you are well served: you can easily extend its functionality in myriad ways via plugins. And that’s what we are going to talk about here.

1. pytest-mock

pytest-mock is a pytest plugin that wraps the standard unittest.mock package and exposes it as a fixture. It lets you patch objects or functions by replacing them with Mock objects. It’s cleaner, easier and simpler than using unittest.mock.patch directly. It also provides spy and stub utilities that are not present in the unittest module. The list of nice features doesn’t stop there. Here’s what pytest-mock can do for you:

- It undoes the mocking automatically after the end of the test.

- It provides a mocker fixture to patch functions, instead of context managers or decorators.

- It has improved reporting of mock call assertion errors.

Let’s consider the following examples, taken and adapted from its docs. The first example illustrates the usage of mock.patch using decorators. It’s very verbose, and the mock arguments arrive in the reverse order of the patch decorators. Following that, we contrast it with pytest-mock.

    import os
    from unittest import mock

    # UnixFS and expected come from the code under test (not shown here)

    @mock.patch('os.remove')
    @mock.patch('os.listdir')
    @mock.patch('shutil.copy')
    def test_unix_fs(mocked_copy, mocked_listdir, mocked_remove):
        UnixFS.rm('file')
        os.remove.assert_called_once_with('file')

        assert UnixFS.ls('dir') == expected
        # ...

        UnixFS.cp('src', 'dst')
        # ...

With pytest-mock:

    def test_unix_fs(mocker):
        mocked_remove = mocker.patch('os.remove')
        UnixFS.rm('file')
        mocked_remove.assert_called_once_with('file')

        mocker.patch('os.listdir')
        assert UnixFS.ls('dir') == expected
        # ...

        mocker.patch('shutil.copy')
        UnixFS.cp('src', 'dst')

Even though the benefits might not seem evident at first, using the mocker fixture helps in at least three ways:

- It reduces the risk of swapping the argument order and introducing a bug.

- It undoes the mocking automatically once the test finishes.

- It integrates better with pytest fixtures and the pytest.mark.parametrize feature.

2. pytest-cov

Measuring your code coverage is important for several reasons. It helps you identify which parts of your code are executed by your tests and which are not. By using a code coverage tool you can:

- Spot old, unused code

- Reveal test omissions

- Create a quality measure of your code

The most popular package to measure coverage in Python is coverage.py. pytest-cov is a pytest plugin that uses coverage.py underneath, but goes a little further. It has subprocess support, allowing you to get coverage of things you run in a subprocess. It also behaves more consistently with pytest and offers all the features available in coverage.py.

The usage is as simple as running...

pytest --cov=my-python-project tests/

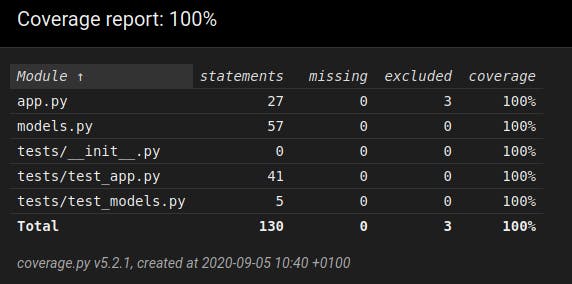

... producing the following report:

    ============================= test session starts ==============================
    platform linux -- Python 3.6.3, pytest-4.0.2, py-1.7.0, pluggy-0.8.0
    plugins: cov-2.6.0
    collected 18 items

    tests/test_app.py ...............                                        [ 83%]
    tests/test_models.py ...                                                 [100%]

    ----------- coverage: platform linux, python 3.6.3-final-0 -----------
    Name                   Stmts   Miss  Cover
    ---------------------------------------------
    app.py                    27      0   100%
    models.py                 57      0   100%
    tests/__init__.py          0      0   100%
    tests/test_app.py         41      0   100%
    tests/test_models.py       5      0   100%
    ---------------------------------------------
    TOTAL                    130      0   100%

    ========================== 18 passed in 11.25 seconds ==========================

You can also generate HTML reports by passing the option --cov-report=html. It produces a nice coverage report that you can navigate to inspect the code. The report shows which parts of the code are covered and which ones are missed.
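To make the idea of a test omission concrete, here is a small sketch. The apply_discount function and its test are hypothetical. Only the member branch is exercised, so a coverage report would count the final return as missed. Running pytest-cov with --cov-report=term-missing should also list the line numbers that were never executed.

```python
def apply_discount(price, is_member):
    """Hypothetical function: members get 10% off (integer prices)."""
    if is_member:
        return price * 90 // 100
    # This line is never executed by the test below,
    # so coverage would report it as missed
    return price


def test_member_discount():
    assert apply_discount(100, True) == 90
```

Adding a second test for the non-member case would bring this module back to full coverage.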

3. pytest-django

Django is one of the most popular frameworks for building web apps in Python. It not only has plenty of features but also great documentation. In fact, many consider its docs among the best out there.

One great built-in feature is its extension of the unittest module. Whenever you need to write a test in Django, you can use django.test.TestCase, which is a subclass of unittest.TestCase. Despite being very good, it suffers from the same warts as unittest.

To make Django tests more idiomatic and flexible, pytest-django was created. pytest-django is a plugin that simplifies your Django tests and provides some handy fixtures. Additionally, it provides all of Django’s TestCase assertions. Now, take a look at a sample of its best features:

pytest Markers

The plugin comes with a nice set of markers, such as pytest.mark.django_db. This marker allows the test to use the database. Each test runs in its own transaction, which is rolled back when the test finishes.

Fixtures

pytest-django bundles both django.test.RequestFactory and django.test.Client as fixtures. The former allows the generation of a request instance that you can use to test views. The latter acts as a dummy web browser, which proves to be very useful when testing your Django application. It can simulate GETs and POSTs and inspect the response, including headers and status code.

Other fixtures provided include django_assert_num_queries, to assert the expected number of DB queries; admin_user, which is a superuser; and mailoutbox, an e-mail outbox for asserting that e-mails sent through Django were actually sent.

4. pytest-asyncio

asyncio is a package that has been part of Python’s standard library since version 3.4. It’s a great way to write asynchronous code, allowing IO-bound applications to perform at their best. pytest-asyncio is a great plugin that makes it easier to test asynchronous programs. Like most pytest plugins, it provides fixtures, in this case for injecting the asyncio event loop and unused TCP ports into the test cases. It also allows the creation of async fixtures.

Another excellent feature is the asyncio test marker. By marking your test with pytest.mark.asyncio, pytest will execute it as an asynchronous task using the event_loop fixture. The example below shows an async test case.

    import asyncio

    import pytest

    @pytest.mark.asyncio
    async def test_example():
        """With pytest.mark.asyncio!"""
        await asyncio.sleep(10)
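Async fixtures deserve a quick sketch too. The fetch_answer coroutine is a hypothetical stand-in for real async I/O. I'm also assuming pytest-asyncio handles fixtures declared with plain pytest.fixture, which is the case in its auto mode; in strict mode, the plugin expects its own pytest_asyncio.fixture decorator instead.

```python
import asyncio

import pytest


async def fetch_answer():
    """Hypothetical coroutine standing in for real async I/O."""
    await asyncio.sleep(0)
    return 42


@pytest.fixture
async def answer():
    # An async fixture: the plugin awaits it before the test runs
    return await fetch_answer()


@pytest.mark.asyncio
async def test_answer(answer):
    assert answer == 42
    # Coroutines can also be awaited directly inside the test
    assert await fetch_answer() == 42
```

The test receives the already-awaited value, so it reads just like a synchronous fixture.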

5. pytest-randomly

One of the most common test smells is the dependent tests smell. It consists of creating a set of tests that rely on a certain execution order. By introducing inter-dependency between tests, you prevent them from running in parallel and may also hide bugs. Generally, a unit test case should test a single unit of behavior. And it’s considered good practice to make tests as isolated as possible.

However, just knowing that is not enough. You may introduce dependent tests unknowingly. And that’s where pytest-randomly comes into play. pytest-randomly shuffles the order of your tests and resets the random seed to a repeatable number for each test. By randomly ordering the tests, you greatly reduce the risk of an unknown inter-test dependency going unnoticed.
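Here is a tiny sketch of the kind of hidden dependency a random order exposes. The module-level cache and both tests are hypothetical. Run in file order, both tests pass; run in the opposite order, the second one fails.

```python
# Shared module-level state: the source of the hidden dependency
cache = {}


def test_populate_cache():
    cache["user"] = "alice"
    assert cache["user"] == "alice"


def test_read_cache():
    # Passes only if test_populate_cache happened to run first
    assert cache.get("user") == "alice"
```

When a shuffled run fails, pytest-randomly prints the seed it used, and passing that value back through its --randomly-seed option should reproduce the same order while you debug.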

6. pytest-clarity

pytest does a great job outputting test failures. Compared to unittest, pytest's output is much clearer and more detailed. You can also tune the amount of information displayed by tweaking the verbosity level. However, despite its best efforts, the output of an assertion error can sometimes be very messy.

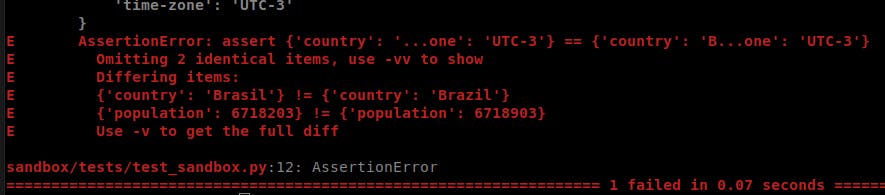

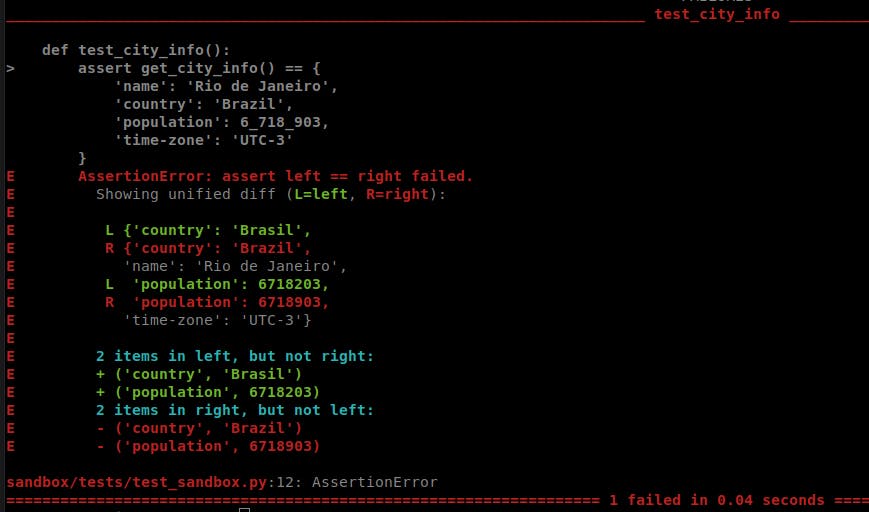

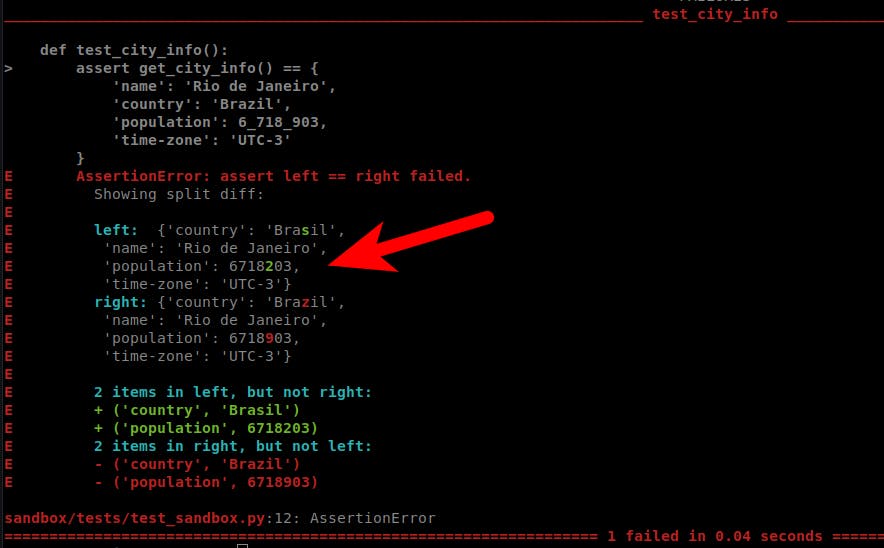

pytest-clarity is plugin built to improve pytest output by enabling a more understandable diff for tests failures. It enhances it by providing useful hints, displaying unified or split diffs. Tracking down a test failure becomes much easier and painless. Here's a simple example, compare the regular pytest output:

...and with pytest-clarity using unified view:

And with split view:

Spotting the diff is much easier now. pytest-clarity is definitely worth trying.

7. pytest-bdd

Behavior-driven development (BDD) is a testing methodology that was born as an extension of test-driven development (TDD). The idea behind it is to use a simple domain-specific scripting language to create executable tests from natural language statements. These statements aim at bridging the gap between the business side and the code. Instead of defining a test purely in an AAA (Arrange, Act, Assert) pattern, it describes the behavior under test in terms of user stories.

One famous DSL used in BDD is the Gherkin format. Gherkin was built to be precise enough to describe business rules in most real-world domains. As an example, let’s consider the scenario of publishing an article on a blog. You can describe it using the following structure:

    Feature: Blog
        A site where you can publish your articles.

        Scenario: Publishing the article
            Given I'm an author user
            And I have an article

            When I go to the article page
            And I press the publish button

            Then I should not see the error message
            And the article should be published  # Note: will query the database

pytest-bdd is a pytest plugin that enables BDD by implementing a subset of the Gherkin language. It has many advantages over other BDD tools, for instance:

- It enables unifying unit and functional tests

- It allows test setup re-usability through fixtures

- It does not require a separate runner

- It leverages the simplicity and flexibility of pytest

pytest-bdd also has some of the best documentation you can find. The README alone has tons of content that will get you up and running pretty quickly.

Conclusion

That’s it for today. I hope this small list of pytest plugins is as useful to you as it is to me. pytest is a really delightful testing framework that has extensibility at its core.

This post was originally published at https://miguendes.me